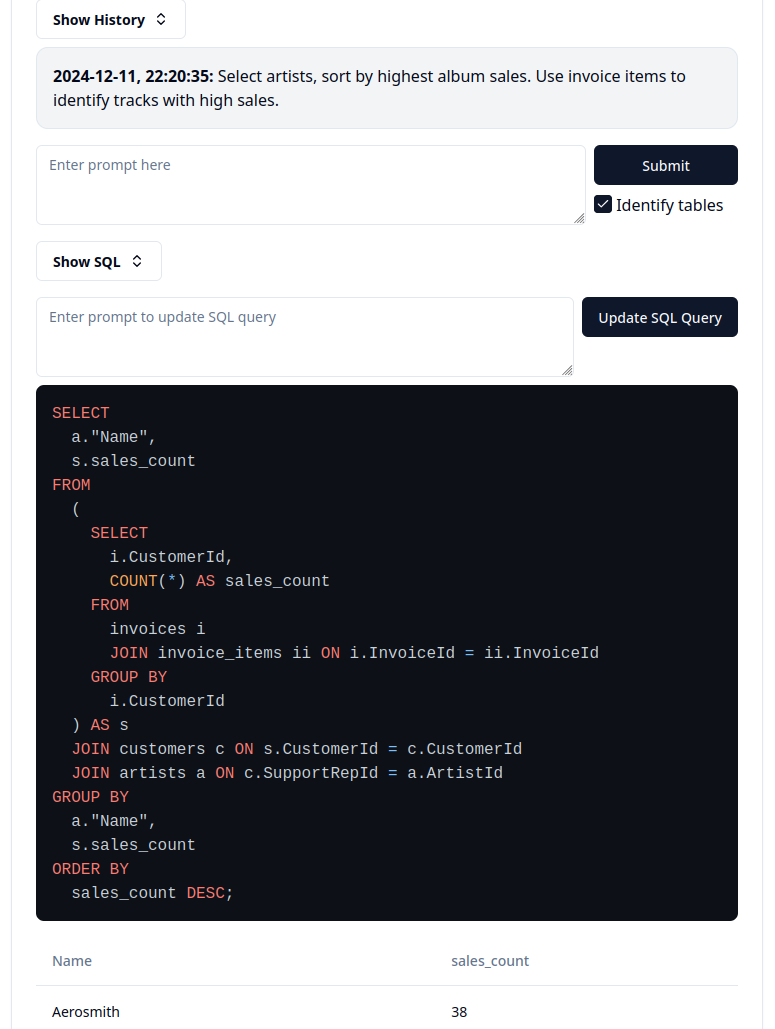

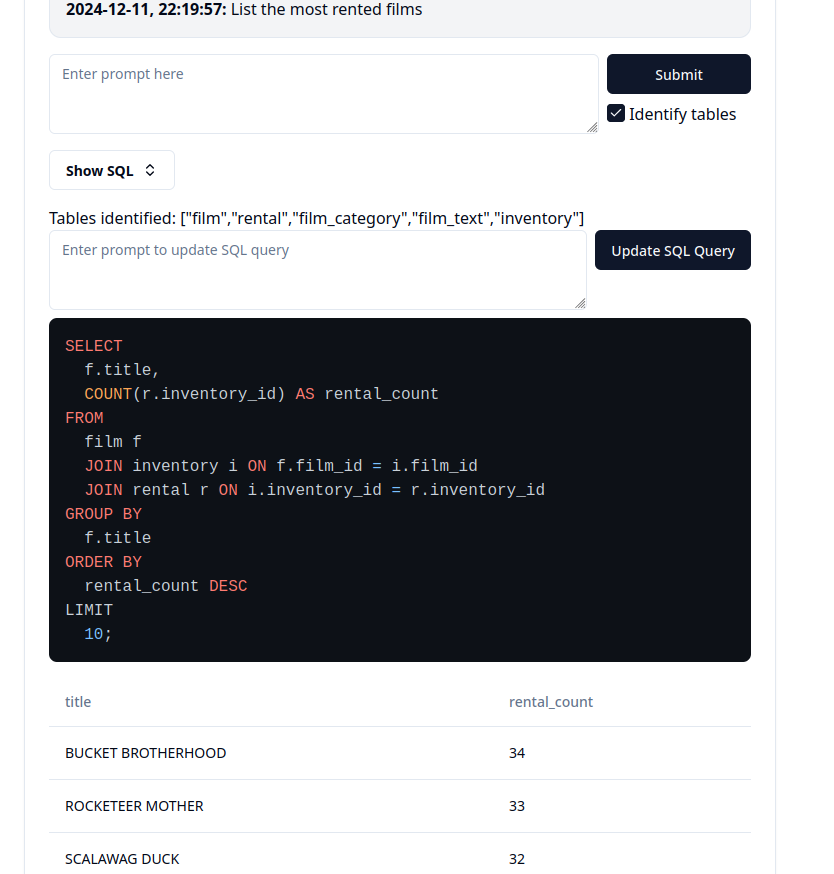

Example generating SQL query

Example generating SQL query

AI is very popular right now, but is it actually useful? Are LLMs reliable enough to solve problems beyond chat bots? I decided to create this demo project to practice developing an AI product and test one of these ideas: LLMs generated SQL queries.

Instead of struggling to figure out which of the 5 left joins gets you the data you want, just let the AI figure it out. A non technical coworker is trying to get data? Just let AI generate the query.

This idea isn’t new, the model that I ended up using for this project, @cf/defog/sqlcoder-7b-2 is from defog.ai, one of many startups creating AI products in this space. I don’t feel like AI is quite there yet, or at least needs to be secondary to the rest of the product.

Regardless, here is what I built.

Links:

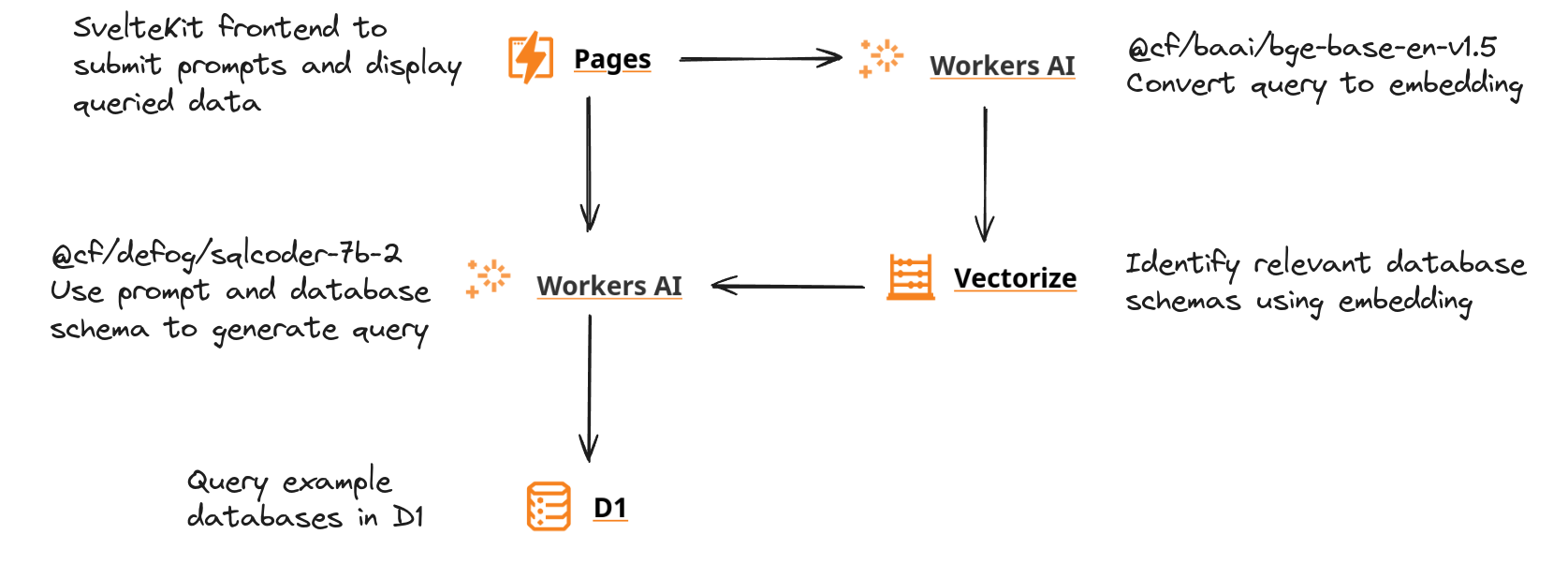

Architecture

Cloudflare resources used for demo ai db query

Cloudflare resources used for demo ai db query

SvelteKit

The frontend is a SvelteKit site deployed to Cloudflare Pages. This was a small project, so it was a single page app a few forms. All of the UI is from shadcn-svelte, which I highly recommend.

To properly display SQL on the frontend, I used sql-formatter and svelte-highlight. This would be applied to SQL from database schemas and SQL generated by the AI.

<script lang="ts">

import sqlLang from 'svelte-highlight/languages/sql';

import Highlight from 'svelte-highlight';

import { format } from 'sql-formatter';

// ...

</script>

<!-- lots of other components here... -->

<Highlight

class="overflow-hidden rounded-lg "

language={sqlLang}

code={format(dataRow.sql, { language: 'sqlite' })}

/>Workers AI

The LLMs used for this application were from the Cloudflare model list because it was the easiest option.

@cf/defog/sqlcoder-7b-2 is a text generation model trained for understanding SQL. I ended up finding that this model performed much better than other text generation models such as llama-3.1-70b-instruct. The standard instruct models seemed to be focused on chatting and would often ignore the prompt. Other models for coding such as deepseek-coder-6.7b-base-awq messed up SQL queries a lot.

Here is what I used for the prompt. I found “prompt engineering” extremely annoying, you never really know if you are making an improvement.

const messages = [

{

role: 'system',

content:

"You are a model that writes SQL queries. Return a SQL query which meets the user's request.\n" +

'Use SQLITE dialect. IMPORTANT: Only return SQL. \n' +

'Queries written must be valid for the following database schema: \n' +

dbSchema

},

{

role: 'user',

content: prompt

}

];@cf/baai/bge-base-en-v1.5 is a text embedding model and was used to convert the prompt and database table schemas to embeddings. I didn’t bother comparing different models for generating embeddings, however, I think this is likely the most useful application of AI right now.

Vectorize

Vectorize is Cloudflare’s vector database and was used to store embeddings of each table schema. Each database would be a different namespace and each table schema in the database would be stored in embedding metadata.

The closest matching table schemas from the query would be passed into the context for the LLM generating the SQL query.

// ...

const returnedMatches = await platform.env.VECTORIZE.query(queryEmbedding.data[0], {

namespace: dbParams,

returnMetadata: 'indexed'

});

matchedTables = returnedMatches.matches.map((m) => m.metadata!.table as string);D1 Database

Showcase with of multiple databases

Showcase with of multiple databases

As part of the demo, I wanted to be able to test different SQLite databases.

After realizing that Cloudflare Pages doesn’t work with better-sqlite3 (some issue with fs), and spending way too much time messing with overly complicated solutions, I gave up and just used Cloudflare D1.

Originally, I wanted users to be upload their own SQLite database, but that was too complicated. I ended up using 3 databases, Chinook, Sakila, and Northwind Traders. These are “benchmark” databases, so it likely makes it easier for the LLM.

Here is where I found the SQLite databases:

- https://www.sqlitetutorial.net/sqlite-sample-database/

- https://github.com/bradleygrant/sakila-sqlite3

- https://github.com/jpwhite3/northwind-SQLite3

Issues Working with AI

The LLM doing a nonsense join

The LLM doing a nonsense join

Although many models have large token limits. I noticed that with massive prompts, the LLM seems to lose focus. It is possible to limit context using RAG through a vector database, however, not all context may be captured.

The LLM sometimes generates nonsense queries. It is possible to try and feed the error back in and prompt the AI again. However, like many of these solutions, it feels like a workaround.

Working with LLMs feels like gambling. Random, low effort, and a chance of reward. There is always a chance that the next prompt generates the right response, but it is more likely you are just wasting your time.

Conclusion

AI works for basic queries

AI works for basic queries

The end result is interesting but I don’t know if it is useful. I feel like there are so many edge cases. Or situations where the LLM requires so much context that you might as well write the query yourself.

I’m sure there are ways to push this further using larger models, multiple queries, or chain of thought. But I’m not sure if this complexity is worth it.